Run this notebook online: or Colab:

3.5. The Image Classification Dataset¶

In sec_naive_bayes, we trained a naive Bayes classifier,

using the MNIST dataset introduced in 1998

[LeCun et al., 1998]. While MNIST had a good run as a

benchmark dataset, even simple models by today’s standards achieve

classification accuracy over 95% making it unsuitable for distinguishing

between stronger models and weaker ones. Today, MNIST serves as more of

sanity checks than as a benchmark. To up the ante just a bit, we will

focus our discussion in the coming sections on the qualitatively

similar, but comparatively complex Fashion-MNIST dataset

[Xiao et al., 2017], which was released in 2017.

%load ../utils/djl-imports

%load ../utils/StopWatch.java

%load ../utils/ImageUtils.java

import ai.djl.basicdataset.cv.classification.*;

import ai.djl.training.dataset.Record;

import java.awt.image.BufferedImage;

import java.awt.Graphics2D;

import java.awt.Color;

3.5.1. Getting the Dataset¶

Just as with MNIST, DJL makes it easy to download and load the

Fashion-MNIST dataset into memory via the FashionMnist class

contained in ai.djl.basicdataset. We briefly work through the

mechanics of loading and exploring the dataset below. Please refer to

sec_naive_bayes for more details on loading data.

Let us first define the getDataset() function that obtains and reads

the Fashion-MNIST dataset. It returns the dataset for the training set

or the validation set depending on the passed in usage

(Dataset.Usage.TRAIN for training and Dataset.Usage.TEST for

validation). You can then call getData(manager) on the dataset to

get the corresponding iterator. It also takes in the batchSize and

randomShuffle which dictates the size of each batch and whether to

randomly shuffle the data respectively.

int batchSize = 256;

boolean randomShuffle = true;

FashionMnist mnistTrain = FashionMnist.builder()

.optUsage(Dataset.Usage.TRAIN)

.setSampling(batchSize, randomShuffle)

.optLimit(Long.getLong("DATASET_LIMIT", Long.MAX_VALUE))

.build();

FashionMnist mnistTest = FashionMnist.builder()

.optUsage(Dataset.Usage.TEST)

.setSampling(batchSize, randomShuffle)

.optLimit(Long.getLong("DATASET_LIMIT", Long.MAX_VALUE))

.build();

mnistTrain.prepare();

mnistTest.prepare();

NDManager manager = NDManager.newBaseManager();

Fashion-MNIST consists of images from 10 categories, each represented by 60k images in the training set and by 10k in the test set. Consequently the training set and the test set contain 60k and 10k images, respectively.

System.out.println(mnistTrain.size());

System.out.println(mnistTest.size());

60000

10000

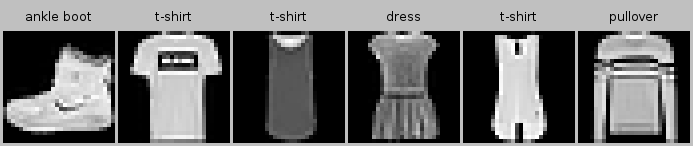

The images in Fashion-MNIST are associated with the following categories: t-shirt, trousers, pullover, dress, coat, sandal, shirt, sneaker, bag and ankle boot. The following function converts between numeric label indices and their names in text.

// Saved in the FashionMnist class for later use

public String[] getFashionMnistLabels(int[] labelIndices) {

String[] textLabels = {"t-shirt", "trouser", "pullover", "dress", "coat",

"sandal", "shirt", "sneaker", "bag", "ankle boot"};

String[] convertedLabels = new String[labelIndices.length];

for (int i = 0; i < labelIndices.length; i++) {

convertedLabels[i] = textLabels[labelIndices[i]];

}

return convertedLabels;

}

public String getFashionMnistLabel(int labelIndice) {

String[] textLabels = {"t-shirt", "trouser", "pullover", "dress", "coat",

"sandal", "shirt", "sneaker", "bag", "ankle boot"};

return textLabels[labelIndice];

}

We can now create a function to visualize these examples. Don’t worry too much about the specifics of visualization. This is simply just to help intuitively understand the data. We essentially read in a number of datapoints and convert their RGB value from 0-255 to between 0-1. We then set the color as grayscale and then display it along with their labels in an external window.

// Saved in the FashionMnistUtils class for later use

public static BufferedImage showImages(

ArrayDataset dataset, int number, int width, int height, int scale, NDManager manager) {

// Plot a list of images

BufferedImage[] images = new BufferedImage[number];

String[] labels = new String[number];

for (int i = 0; i < number; i++) {

Record record = dataset.get(manager, i);

NDArray array = record.getData().get(0).squeeze(-1);

int y = (int) record.getLabels().get(0).getFloat();

images[i] = toImage(array, width, height);

labels[i] = getFashionMnistLabel(y);

}

int w = images[0].getWidth() * scale;

int h = images[0].getHeight() * scale;

return ImageUtils.showImages(images, labels, w, h);

}

private static BufferedImage toImage(NDArray array, int width, int height) {

System.setProperty("apple.awt.UIElement", "true");

BufferedImage img = new BufferedImage(width, height, BufferedImage.TYPE_BYTE_GRAY);

Graphics2D g = (Graphics2D) img.getGraphics();

for (int i = 0; i < width; i++) {

for (int j = 0; j < height; j++) {

float c = array.getFloat(j, i) / 255; // scale down to between 0 and 1

g.setColor(new Color(c, c, c)); // set as a gray color

g.fillRect(i, j, 1, 1);

}

}

g.dispose();

return img;

}

Here are the images and their corresponding labels (in text) for the first few examples in the training dataset.

final int SCALE = 4;

final int WIDTH = 28;

final int HEIGHT = 28;

showImages(mnistTrain, 6, WIDTH, HEIGHT, SCALE, manager)

3.5.2. Reading a Minibatch¶

To make our life easier when reading from the training and test sets, we

use the getData(manager). Recall that at each iteration,

getData(manager) reads a minibatch of data with size batchSize

each time. We then get the X and y by calling getData() and

getLabels() on each batch respectively.

Note: During training, reading data can be a significant performance bottleneck, especially when our model is simple or when our computer is fast.

Let us look at the time it takes to read the training data.

StopWatch stopWatch = new StopWatch();

stopWatch.start();

for (Batch batch : mnistTrain.getData(manager)) {

NDArray x = batch.getData().head();

NDArray y = batch.getLabels().head();

}

System.out.println(String.format("%.2f sec", stopWatch.stop()));

0.21 sec

We are now ready to work with the Fashion-MNIST dataset in the sections that follow.

3.5.3. Summary¶

Fashion-MNIST is an apparel classification dataset consisting of images representing 10 categories.

We will use this dataset in subsequent sections and chapters to evaluate various classification algorithms.

We store the shape of each image with height \(h\) width \(w\) pixels as \(h \times w\) or

(h, w).Data iterators are a key component for efficient performance. Rely on well-implemented iterators that exploit multi-threading to avoid slowing down your training loop.

3.5.4. Exercises¶

Does reducing the

batchSize(for instance, to 1) affect read performance?Use the DJL documentation to see which other datasets are available in

ai.djl.basicdataset.